AI music tools usually start on a laptop where you type a prompt and wait for a track. That workflow feels distant from how bands write songs, trading groove and chemistry for text boxes and genre presets. MUSE asks what AI music looks like if it starts from playing instead of typing, treating the machine as a bandmate that listens and responds rather than a generator you feed instructions.

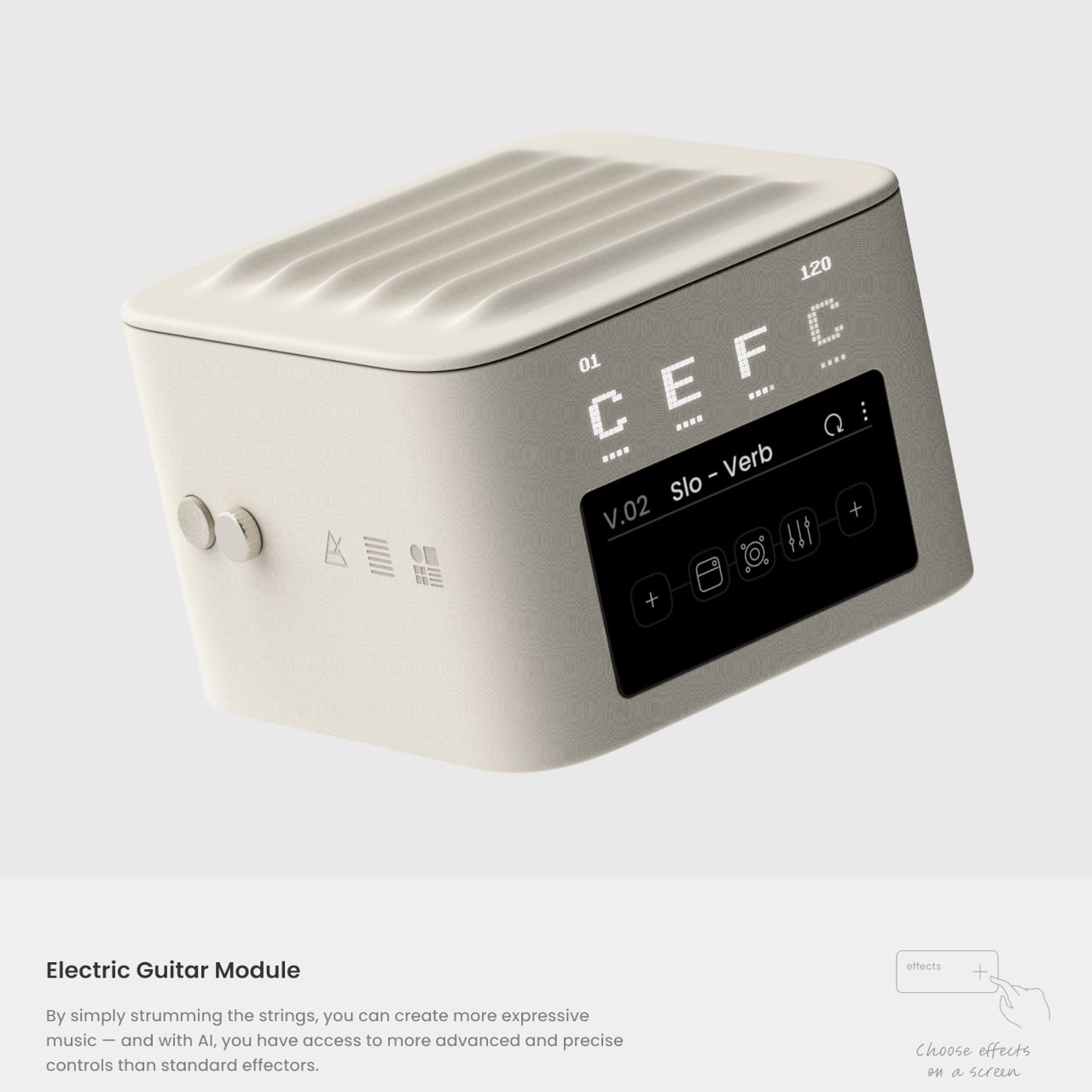

MUSE is a next-generation AI music module system designed for band musicians. It is not one box but a family of five modules (vocal, drum, bass, synthesizer, and electric guitar), each tuned to a specific role. You feed each one ideas the way you would feed a bandmate, and the AI responds in real time, filling out parts and suggesting directions that match what you just played.

Designers: Hyeyoung Shin, Dayoung Chang

In a band rehearsal where each member has their own module, the drummer taps patterns into the drum unit, the bassist works with the bass module to explore grooves, and the singer hums into the vocal module to spin melodies out of half-formed ideas. Instead of staring at a screen, everyone keeps moving and reacting, with an extra layer of AI quietly proposing fills, variations, and harmonies.

MUSE is built around the idea that timing, touch, and phrasing carry information that text prompts miss. Tapping rhythms, humming lines, or strumming chords lets the system pick up on groove and style, not just genre labels. Those nuances feed the AI’s creative process, so what comes back feels more like an extension of your playing than a generic backing track cobbled together from preset patterns.

The modules can be scattered around a home rather than living in a studio. One unit might sit near the bed for late-night vocal ideas, another by the desk for quick guitar riffs between emails, and a drum module on the coffee table for couch jams. Because they look like small colorful objects rather than studio gear, they can stay out, ready to catch ideas without turning the house into a control room.

Each module’s color and texture match its role (a plush vocal unit, a punchy drum block, a bright synth puck), making them easy to grab and easy to live with. They read more like playful home objects than intimidating equipment, which lowers the barrier to experimenting. Picking one up becomes a small ritual, a way to nudge yourself into making sound instead of scrolling or staring at blank sessions.

MUSE began with the question of how creators can embrace AI without losing their identity. The answer it proposes is to keep the musician’s body and timing at the center, letting AI listen and respond rather than dictate. It treats AI as a bandmate that learns your groove over time, not a replacement, and that shift might be what keeps humans in the loop as the tools get smarter.

The post These 5 AI Modules Listen When You Hum, Tap, or Strum, Not Type first appeared on Yanko Design.
